Government Tightens Rules on AI Content, Mandates Labels and Rapid Takedowns of Deepfakes

News Mania Desk /Piyal Chatterjee/ 10th February 2026

The Indian government has introduced stringent new regulations to curb the misuse of artificial intelligence–generated content, placing tougher responsibilities on social media platforms and digital intermediaries. The revised rules aim to address the growing threat posed by deepfakes and other synthetic media that can mislead users, spread misinformation and cause reputational or personal harm.

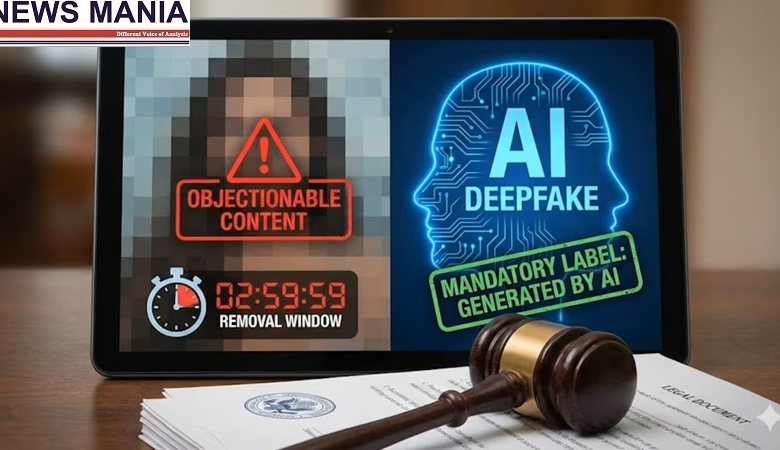

Under the updated Information Technology rules, online platforms will now be required to clearly label content that has been created or altered using artificial intelligence tools. This applies across formats, including text, images, audio and video. The aim is to make the origin of such content transparent, so that users can readily identify material that is not entirely authentic, and to curb deceptive practices online.

One of the most significant changes is the introduction of a sharply reduced deadline for removing objectionable AI-generated content. Platforms must now take down flagged material within three hours of receiving a complaint or official notice. This marks a major shift from earlier timelines, which allowed considerably more time for action. The government has said the shorter window is essential to limit the rapid spread of harmful or misleading content, particularly during sensitive situations such as elections, communal tensions or public emergencies.

The new framework also places greater accountability on digital intermediaries to proactively detect and manage synthetic content. Companies are expected to put in place reasonable technical measures to identify whether user-uploaded material has been generated or manipulated using AI. While the rules do not prescribe specific technologies, platforms are required to demonstrate that they have systems and processes capable of complying with the law.

The government has formally defined "synthetically generated information" under the amended rules, covering any content that appears realistic but is wholly or partially created through artificial intelligence. By bringing such material under explicit regulatory oversight, authorities aim to close gaps that previously allowed deepfakes and manipulated media to circulate with limited consequences.

Officials have said the changes are part of a broader effort to strengthen trust in the digital ecosystem as generative AI tools become more widely accessible. According to the government, unchecked use of such technologies poses risks not only to individuals but also to public order and democratic processes. Mandatory labelling and swift takedowns are being positioned as safeguards rather than restrictions on innovation.

The rules are set to come into force later this month, giving platforms a limited window to update their policies, workflows and technical infrastructure. Industry players have acknowledged that compliance could be challenging, especially for large platforms that handle massive volumes of user-generated content in real time. Smaller companies and startups may also face operational hurdles in meeting the new requirements.

At the same time, the regulations have sparked debate over free expression and the risk of over-regulation. Some digital rights advocates argue that rapid takedown mandates could make platforms overly cautious, leading them to remove legitimate content rather than risk non-compliance. The government, however, has maintained that the rules strike a balance between encouraging technological progress and preventing harm.

With these changes, India has signalled its intent to take a firm stance on the governance of artificial intelligence, placing responsibility on technology companies to ensure transparency, accountability and user safety in an increasingly AI-driven online world.