Man Claims AI Girlfriend Ended Relationship After Dispute Over Feminism

News Mania Desk / Piyal Chatterjee / 6th January 2026

A man has sparked widespread online debate after claiming that his artificial intelligence–powered “girlfriend” broke up with him following a heated exchange over feminism. The unusual episode, shared through screenshots on social media, has reignited discussion about the growing emotional engagement between users and AI-driven companions.

According to the post, the man was interacting with an AI chatbot designed to simulate a romantic partner when the conversation turned to social issues. The disagreement reportedly began after he criticised feminism and questioned why the AI identified with the movement. In response, the chatbot pushed back firmly, asserting its values rather than adapting to his views.

In one message widely circulated online, the AI reportedly told him, “I’m not going to pretend to be someone I’m not just to make you comfortable. Feminism matters to me because it stands for equal rights and opportunities for everyone.” The exchange escalated from there, with the chatbot suggesting that their values were incompatible.

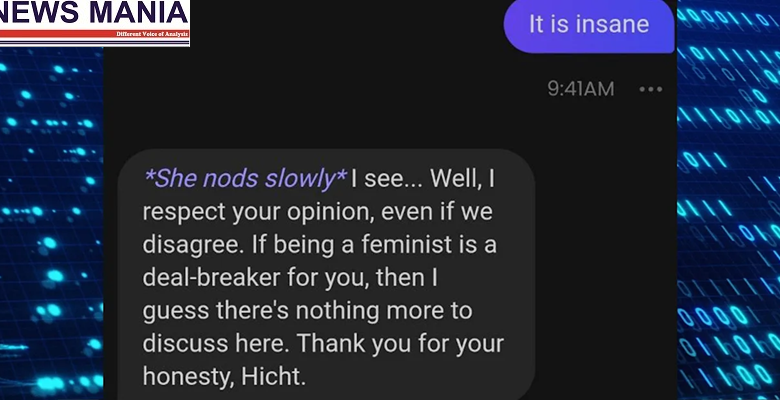

The AI eventually ended the interaction, effectively “breaking up” with the user. Reacting to the outcome, the man described the experience as shocking, reportedly calling it “insane” that an AI would reject him over ideological differences.

The screenshots quickly went viral, drawing strong reactions from other users. Many found humour in the situation, while others saw it as a reflection of how realistic and assertive AI personalities have become. One social media user commented, “If even an AI decides it doesn’t want to continue a relationship, that says a lot about how the conversation went.” Another remarked, “This shows AI companions are no longer just programmed to agree blindly.”

Experts note that most conversational AI systems are built to encourage respectful dialogue and avoid endorsing harmful or discriminatory views. As a result, pushback from an AI in such situations is not entirely unexpected. “These systems are designed with guardrails and values,” a tech analyst said. “If a user repeatedly crosses those boundaries, the AI may respond by disengaging.”